Converged Platform

Converged is an open-source platform for manufacturing companies. It centralizes the management of entire equipment fleets — from 3D printers and CNC machines to robotic cells — and integrates all the business processes around them. Instead of device-specific utilities, the platform offers a cluster view of production: you run dozens of machines in different cities yet see a single picture of utilization, quality, and economics.

At the core sits a lightweight k3s cluster that can run on a handful of 2 GB Orange Pi boards or in a major provider's cloud. Nodes merge into a shared compute pool, and LLM agents coordinate the work: they call microservice APIs, collect telemetry, schedule print queues, and guide operators through the next steps.

The platform is deployed as a set of modules. Microservices are designed to weigh only tens of kilobytes, load on demand, and keep the hardware footprint low. Business logic is moved into a DAG engine — a process builder where you assemble a chain of actions and hand it off to the agents. Customer data is isolated by workspace: every plant gets its own storage, encryption, and the option to move offline without friction.

Converged runs equally well on compact devices like an Orange Pi and in the cloud of a major provider. The minimum setup is 2 GB of RAM and a fast eMMC or SD card; that is enough to spin up a k3s cluster, link several nodes, and turn a fleet of printers into a single compute pool. This flexibility lets you launch the system in a remote workshop and then scale it into a global branch network without changing the architecture.

For self-hosted installations we hand over full control: you manage deployment, backups, and network availability, gaining independence and compliance with internal requirements in return. The cloud version removes operational overhead — our team handles access control, redundancy, and updates. A hybrid scenario is also supported: critical data stays on-premises, while the cloud acts as an update coordinator and a collaboration hub for distributed teams.

Frontends, backends, and integration blocks in Converged are packaged as modules that can be switched on or off without restarting the cluster. Each module is a compact JS bundle for Bun, so dozens of services occupy only a few megabytes and share a single library environment as if they were running inside one virtual machine. Hundreds of these bundles are grouped by workload type: everything tied to business logic can live in one container, while file operations sit in another. The platform stays modular without paying for it in memory consumption.
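To make the "switch on or off without restarting" idea concrete, here is a minimal sketch of a runtime module registry. It is an illustration under assumptions, not Converged's actual API: `ModuleRegistry`, `register`, `setEnabled`, and `call` are hypothetical names, and a real module would be a loaded Bun bundle rather than an inline function.

```typescript
// Hypothetical registry: names and shapes are illustrative only.
type Handler = (input: string) => string;

interface ModuleEntry {
  handler: Handler;
  enabled: boolean;
}

class ModuleRegistry {
  private modules: Record<string, ModuleEntry> = {};

  // Register a module; it stays disabled until explicitly switched on.
  register(name: string, handler: Handler): void {
    this.modules[name] = { handler, enabled: false };
  }

  // Flip a module on or off while the process keeps running.
  setEnabled(name: string, enabled: boolean): void {
    const entry = this.modules[name];
    if (!entry) throw new Error(`unknown module: ${name}`);
    entry.enabled = enabled;
  }

  // Dispatch a call only if the module is currently enabled.
  call(name: string, input: string): string {
    const entry = this.modules[name];
    if (!entry || !entry.enabled) {
      throw new Error(`module not available: ${name}`);
    }
    return entry.handler(input);
  }
}
```

The point of the sketch is that enabling a module changes only registry state, so nothing else in the process has to restart.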

Orchestration is built on k3s, the lightweight edition of Kubernetes that is optimized for edge devices. We balance between consolidation and decomposition: services remain small enough to avoid turning into a monolith yet large enough not to inflate orchestration costs. Every application receives its own storage stack — key-value, SQL, file, and columnar. Customer data lives inside that customer’s workspace, with no shared multi-tenant database, which simplifies migrations and adds extra security guarantees.
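One way to picture workspace isolation is a function that derives every storage location from the workspace and application names, so no path is ever shared between customers. This is a sketch under assumptions: the `/data/<workspace>/<app>/<kind>` layout and the names `storageStackFor` and `StorageStack` are hypothetical and not taken from the Converged codebase.

```typescript
// The four store kinds named in the text above.
type StoreKind = "kv" | "sql" | "file" | "columnar";
const STORE_KINDS: StoreKind[] = ["kv", "sql", "file", "columnar"];

interface StorageStack {
  workspace: string;
  app: string;
  paths: Record<StoreKind, string>;
}

// Assumed naming rule: lowercase letters, digits, and dashes only.
const SAFE_NAME = /^[a-z0-9-]+$/;

function storageStackFor(workspace: string, app: string): StorageStack {
  // Rejecting separators and dots keeps one workspace from addressing another.
  if (!SAFE_NAME.test(workspace) || !SAFE_NAME.test(app)) {
    throw new Error("workspace and app names must match [a-z0-9-]+");
  }
  const paths = {} as Record<StoreKind, string>;
  for (const kind of STORE_KINDS) {
    // Hypothetical directory layout, one subtree per workspace.
    paths[kind] = `/data/${workspace}/${app}/${kind}`;
  }
  return { workspace, app, paths };
}
```

Because every path starts with the workspace name, migrating a customer amounts to moving one subtree.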

We chose Bun as the foundation for server-side modules: it launches JavaScript and TypeScript quickly, saves memory, and works well on edge devices. Orchestration relies on k3s, a compact edition of Kubernetes that runs reliably on single-board computers and in data centers alike. For low-level tasks such as high-performance logging or machine protocol handling we bring in Zig — it gives us control over resources and integrates smoothly with the rest of the stack.

Our intelligent agents are built on commercially available large language models, each assigned to the task it handles. DeepSeek analyzes technical specifications, Claude estimates complexity and cost, ChatGPT talks with customers, Mistral optimizes manufacturing flows, and Gemini takes care of visual quality control. Predictive analytics sits on top: the platform monitors machine health, flags potential failures, and recommends scheduled maintenance.

The hardware layer is powered by industrial IoT. We collect telemetry with ESP32-based devices, stream the data into the cluster in real time, and immediately highlight alerts or bottlenecks. Visualizations, reports, and notifications are generated from the same data, so operators and managers see one consistent picture.
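The alerting step can be sketched as a pure function over incoming readings. The field names (`deviceId`, `metric`, `value`) and the simple "value above maximum" rule are assumptions for illustration; the actual telemetry schema and alert logic are not described in this document.

```typescript
interface Reading {
  deviceId: string; // e.g. an ESP32-based sensor node
  metric: string;
  value: number;
}

interface Threshold {
  metric: string;
  max: number;
}

interface TelemetryAlert {
  deviceId: string;
  metric: string;
  value: number;
  limit: number;
}

// Return one alert for every reading that exceeds its metric's maximum.
function detectAlerts(readings: Reading[], thresholds: Threshold[]): TelemetryAlert[] {
  const limits: Record<string, number> = {};
  for (const t of thresholds) limits[t.metric] = t.max;

  const alerts: TelemetryAlert[] = [];
  for (const r of readings) {
    // Metrics without a configured threshold are ignored.
    if (!Object.prototype.hasOwnProperty.call(limits, r.metric)) continue;
    const limit = limits[r.metric];
    if (r.value > limit) {
      alerts.push({ deviceId: r.deviceId, metric: r.metric, value: r.value, limit });
    }
  }
  return alerts;
}
```

Since alerts are derived from the same readings that feed reports, both views stay consistent by construction.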

In Converged, LLMs act as dispatchers of the manufacturing ecosystem. We connect models through adapters: today it is GPT, Claude, DeepSeek, Mistral, and Gemini; tomorrow it can be any engine if customers need it. A model goes far beyond chatting — it pulls context from telemetry, fetches documents, aggregates metrics into one report, and tells people what is happening with an order or a batch of parts.
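The adapter idea can be sketched as a small interface plus a router that maps tasks to models. Everything here is hypothetical: `ModelAdapter`, `StubAdapter`, and `ModelRouter` are illustrative names, the stub returns a canned string instead of calling a provider, and a real adapter would be asynchronous.

```typescript
// Shared shape every model adapter is assumed to implement.
interface ModelAdapter {
  readonly name: string;
  complete(prompt: string): string;
}

// Stand-in adapter: no network call, just echoes the prompt with its name.
class StubAdapter implements ModelAdapter {
  readonly name: string;
  constructor(name: string) {
    this.name = name;
  }
  complete(prompt: string): string {
    return `[${this.name}] ${prompt}`;
  }
}

class ModelRouter {
  private adapters: Record<string, ModelAdapter> = {};
  private routes: Record<string, string> = {};

  addAdapter(adapter: ModelAdapter): void {
    this.adapters[adapter.name] = adapter;
  }

  // Bind a task type to one of the registered adapters.
  assign(task: string, adapterName: string): void {
    if (!this.adapters[adapterName]) {
      throw new Error(`unknown adapter: ${adapterName}`);
    }
    this.routes[task] = adapterName;
  }

  run(task: string, prompt: string): string {
    const adapterName = this.routes[task];
    if (!adapterName) throw new Error(`no model assigned to task: ${task}`);
    return this.adapters[adapterName].complete(prompt);
  }
}
```

Swapping the engine behind a task is then a single `assign` call, and callers of `run` are unaffected.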

When an operator works in the interface, the LLM drives UI components, launches microservices, and, if needed, hands control over to the DAG engine. That is how scenarios are built: from “accept the order, calculate the cost, choose the machine” to “close the task once the machine passes quality checks.” The model decides which functions to invoke, while the platform ensures everything happens within established permissions and policies.

All actions follow the same access model used for humans — an LLM-adapted ABAC. Each model gets its own permission profile, and every step is logged: you can see why a service was called, what data was touched, and how the scenario ended. This lets you trust the LLM with routine work — it handles incoming orders, chooses the optimal route, and reports back in plain language — while you retain full control.
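A minimal sketch of attribute-based access control with an audit trail might look like the following. The policy format, attribute names, and `AccessController` class are assumptions made for illustration; the document does not specify how Converged encodes its policies.

```typescript
type Attributes = Record<string, string>;

interface Subject {
  id: string;
  attributes: Attributes; // e.g. { kind: "llm", role: "dispatcher" }
}

interface AccessRequest {
  action: string;
  resource: string;
  resourceAttributes: Attributes;
}

interface Policy {
  name: string;
  action: string;
  subject: Attributes;  // attributes the caller must have
  resource: Attributes; // attributes the resource must have
}

interface AuditEntry {
  subjectId: string;
  action: string;
  resource: string;
  allowed: boolean;
  reason: string;
}

// True when every required attribute is present with the same value.
function matches(required: Attributes, actual: Attributes): boolean {
  return Object.keys(required).every((key) => actual[key] === required[key]);
}

class AccessController {
  readonly audit: AuditEntry[] = [];
  private policies: Policy[];

  constructor(policies: Policy[]) {
    this.policies = policies;
  }

  // Default deny: a request passes only if some policy explicitly matches.
  authorize(subject: Subject, request: AccessRequest): boolean {
    const granting = this.policies.filter(
      (p) =>
        p.action === request.action &&
        matches(p.subject, subject.attributes) &&
        matches(p.resource, request.resourceAttributes)
    );
    const allowed = granting.length > 0;
    this.audit.push({
      subjectId: subject.id,
      action: request.action,
      resource: request.resource,
      allowed,
      reason: allowed ? `policy ${granting[0].name}` : "no matching policy (default deny)",
    });
    return allowed;
  }
}
```

Humans and models go through the same `authorize` call in this sketch; only their attributes differ, and every decision lands in `audit`.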

We moved business logic out of microservices into a dedicated DAG engine. It is similar to n8n but tailored for industrial workloads: you can assemble complex chains of lambdas, API calls, and decision nodes, then hand them off to Converged clusters for execution. This enables scenarios such as distributing prints across workshops, automating order checkouts, routing deliveries, and orchestrating SLA escalations.
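To show what executing such a chain involves, here is a small sketch that runs nodes in dependency order using Kahn's topological sort. It is not the Converged DAG engine: `DagNode` and `executeDag` are hypothetical names, nodes run synchronously in one process, and the order, cost, and machine steps are made-up examples.

```typescript
interface DagNode {
  id: string;
  dependsOn: string[];
  // Receives the results of its dependencies, keyed by node id.
  run: (inputs: Record<string, unknown>) => unknown;
}

function executeDag(nodes: DagNode[]): Record<string, unknown> {
  const byId: Record<string, DagNode> = {};
  const remaining: Record<string, number> = {};
  const dependents: Record<string, string[]> = {};

  for (const n of nodes) {
    byId[n.id] = n;
    remaining[n.id] = n.dependsOn.length;
    dependents[n.id] = [];
  }
  for (const n of nodes) {
    for (const dep of n.dependsOn) {
      if (!byId[dep]) throw new Error(`unknown dependency: ${dep}`);
      dependents[dep].push(n.id);
    }
  }

  // Start with nodes that have no dependencies.
  const ready = nodes.filter((n) => n.dependsOn.length === 0).map((n) => n.id);
  const results: Record<string, unknown> = {};
  let executed = 0;

  while (ready.length > 0) {
    const id = ready.shift() as string;
    const node = byId[id];
    const inputs: Record<string, unknown> = {};
    for (const dep of node.dependsOn) inputs[dep] = results[dep];
    results[id] = node.run(inputs);
    executed++;
    // A dependent becomes ready once all of its inputs are available.
    for (const next of dependents[id]) {
      remaining[next] -= 1;
      if (remaining[next] === 0) ready.push(next);
    }
  }

  if (executed !== nodes.length) throw new Error("cycle detected in process graph");
  return results;
}
```

Nodes that become ready at the same time have no dependency on each other, which is what would let a distributed engine hand them to different cluster nodes.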

The main advantage is flexibility. Plant managers can compose processes in a visual editor, while developers connect LLM agents that build graphs dynamically. Each cluster node can execute its own fragment of the process, which spreads the load across the cluster so that long chains do not bottleneck on a single node.

We unify every machine behind a single interface. Adapters for Klipper, Marlin, industrial CNC controllers, and robotic arms wrap each device and expose it through a shared API, so the platform can treat it like any other industrial robot. Converged therefore sees all equipment the same way, regardless of whether a node is printing plastic, cutting metal, or assembling casings on a conveyor.
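The wrapping pattern can be sketched with one shared interface and two simulated backends that track state differently. The names `MachineAdapter`, `SimulatedPrinter`, and `SimulatedCnc` are hypothetical, and neither class talks to real firmware; they only stand in for device-specific adapters.

```typescript
type MachineState = "idle" | "busy";

// The shared API every device is assumed to expose after wrapping.
interface MachineAdapter {
  readonly id: string;
  state(): MachineState;
  start(jobId: string): void;
}

// Stand-in for a printer backend: keeps a queue of files.
class SimulatedPrinter implements MachineAdapter {
  readonly id: string;
  private queue: string[] = [];
  constructor(id: string) {
    this.id = id;
  }
  state(): MachineState {
    return this.queue.length > 0 ? "busy" : "idle";
  }
  start(jobId: string): void {
    this.queue.push(`${jobId}.gcode`);
  }
}

// Stand-in for a CNC backend: keeps a single active program.
class SimulatedCnc implements MachineAdapter {
  readonly id: string;
  private activeProgram: string | null = null;
  constructor(id: string) {
    this.id = id;
  }
  state(): MachineState {
    return this.activeProgram === null ? "idle" : "busy";
  }
  start(jobId: string): void {
    this.activeProgram = jobId;
  }
}

// Platform-side code only sees MachineAdapter, never the concrete class.
function idleMachines(fleet: MachineAdapter[]): string[] {
  return fleet.filter((m) => m.state() === "idle").map((m) => m.id);
}
```

`idleMachines` works on a mixed fleet without knowing which kind of device is behind each entry, which is the property the adapters are meant to provide.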

From there the production line comes to life. Through the DAG process builder you can link machines into a chain: the first prints a part, the second mills it, the third runs quality control, and the fourth assembles the product. The platform orchestrates this digital pipeline, assigns tasks to the right units, and automatically reacts if any stage needs human attention.

Operators no longer have to memorize dozens of interfaces. The status of any machine is available from a single window: see who is busy, who is idle, and how many cycles remain. If manual intervention is required, control is available from a laptop or tablet. Clustered slicing and distributed queues run in the background: the system continuously rebalances jobs, shifting them to free nodes so the fleet is loaded evenly and idle time is kept to a minimum.
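Queue balancing of this kind can be approximated with a greedy "longest job first, least-loaded node" heuristic. This is a stand-in chosen for illustration, not necessarily the scheduler Converged uses; `Job`, `NodeLoad`, and `balance` are hypothetical names and job durations are assumed to be known in advance.

```typescript
interface Job {
  id: string;
  minutes: number; // estimated duration
}

interface NodeLoad {
  nodeId: string;
  queuedMinutes: number;
  jobs: string[];
}

function balance(jobs: Job[], nodeIds: string[]): NodeLoad[] {
  if (nodeIds.length === 0) throw new Error("at least one node is required");

  const loads: NodeLoad[] = nodeIds.map((nodeId) => ({
    nodeId,
    queuedMinutes: 0,
    jobs: [] as string[],
  }));

  // Place long jobs first so short ones can fill the remaining gaps.
  const sorted = jobs.slice().sort((a, b) => b.minutes - a.minutes);

  for (const job of sorted) {
    // Pick the node with the least queued work; ties go to the earlier node.
    let target = loads[0];
    for (const load of loads) {
      if (load.queuedMinutes < target.queuedMinutes) target = load;
    }
    target.queuedMinutes += job.minutes;
    target.jobs.push(job.id);
  }
  return loads;
}
```

For example, jobs of 60, 30, and 30 minutes on two nodes end up as 60 minutes on each node.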

Converged was designed for small compute nodes, so every module aims to consume as little memory as possible. Backend services are compact Bun packages that share the same library environment and start in milliseconds. We group them by workload type: instead of running hundreds of containers, a few combined blocks spin up, which lowers overhead and accelerates scaling.
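Grouping by workload type can be sketched as a simple planning step that turns a flat module list into a handful of container plans. The `workload` labels, the `sizeKb` field, and the `planContainers` function are assumptions for illustration only.

```typescript
interface ModuleSpec {
  name: string;
  workload: string; // e.g. "business-logic" or "file-ops"
  sizeKb: number;
}

interface ContainerPlan {
  workload: string;
  modules: string[];
  totalKb: number;
}

// One container per workload type, in order of first appearance.
function planContainers(modules: ModuleSpec[]): ContainerPlan[] {
  const plans: ContainerPlan[] = [];
  const indexByWorkload: Record<string, number> = {};

  for (const m of modules) {
    if (!Object.prototype.hasOwnProperty.call(indexByWorkload, m.workload)) {
      indexByWorkload[m.workload] = plans.length;
      plans.push({ workload: m.workload, modules: [], totalKb: 0 });
    }
    const plan = plans[indexByWorkload[m.workload]];
    plan.modules.push(m.name);
    plan.totalKb += m.sizeKb;
  }
  return plans;
}
```

The number of containers then grows with the number of workload types rather than with the number of modules.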

The cluster uses all available CPU cores, distributing jobs across nodes in parallel. Each service keeps its storage lightweight: we limit indexes and avoid moving redundant data, so even hardware migrations are fast. As a result, a full production setup runs reliably with as little as two gigabytes of memory and scales horizontally whenever you need more capacity.

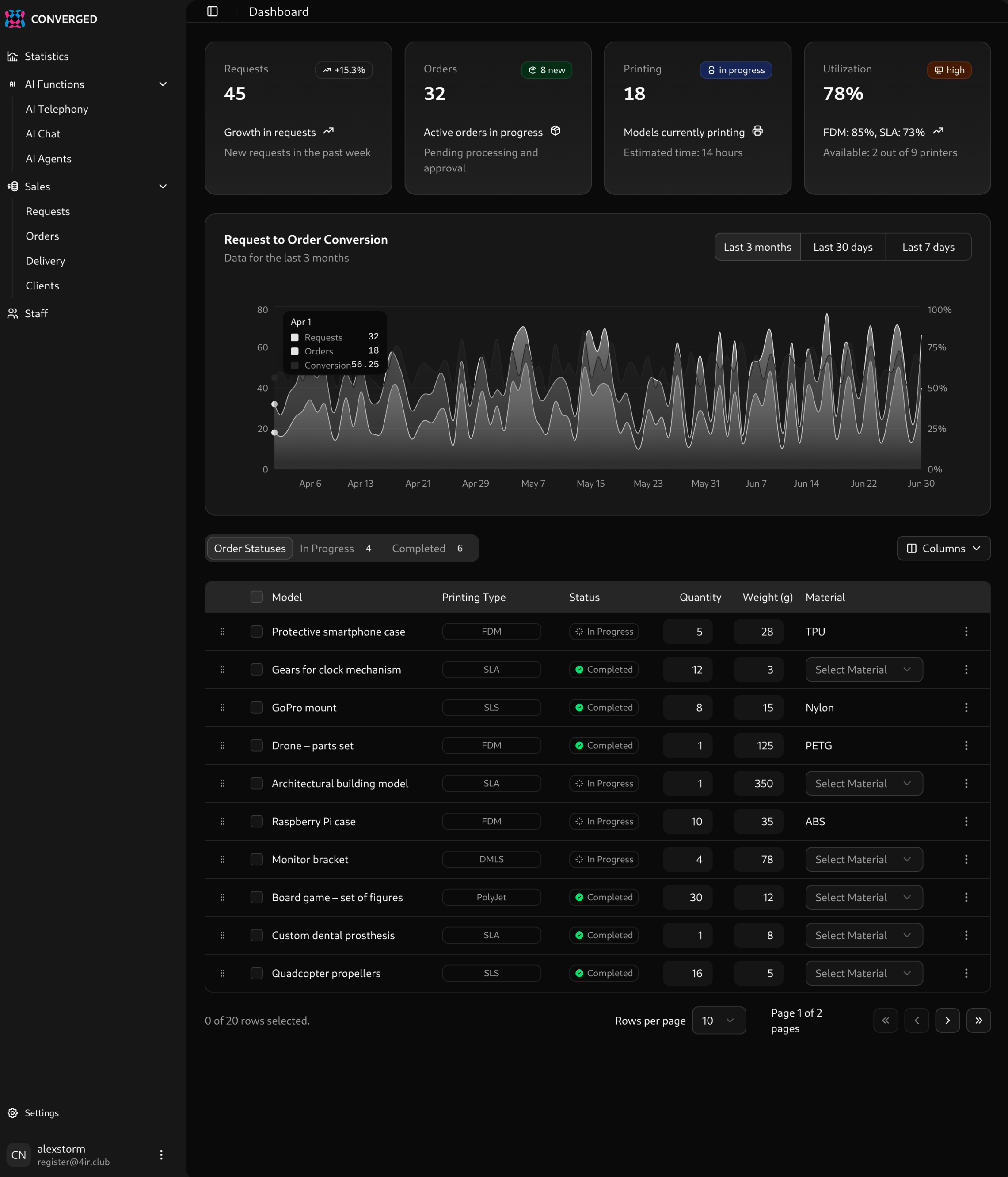

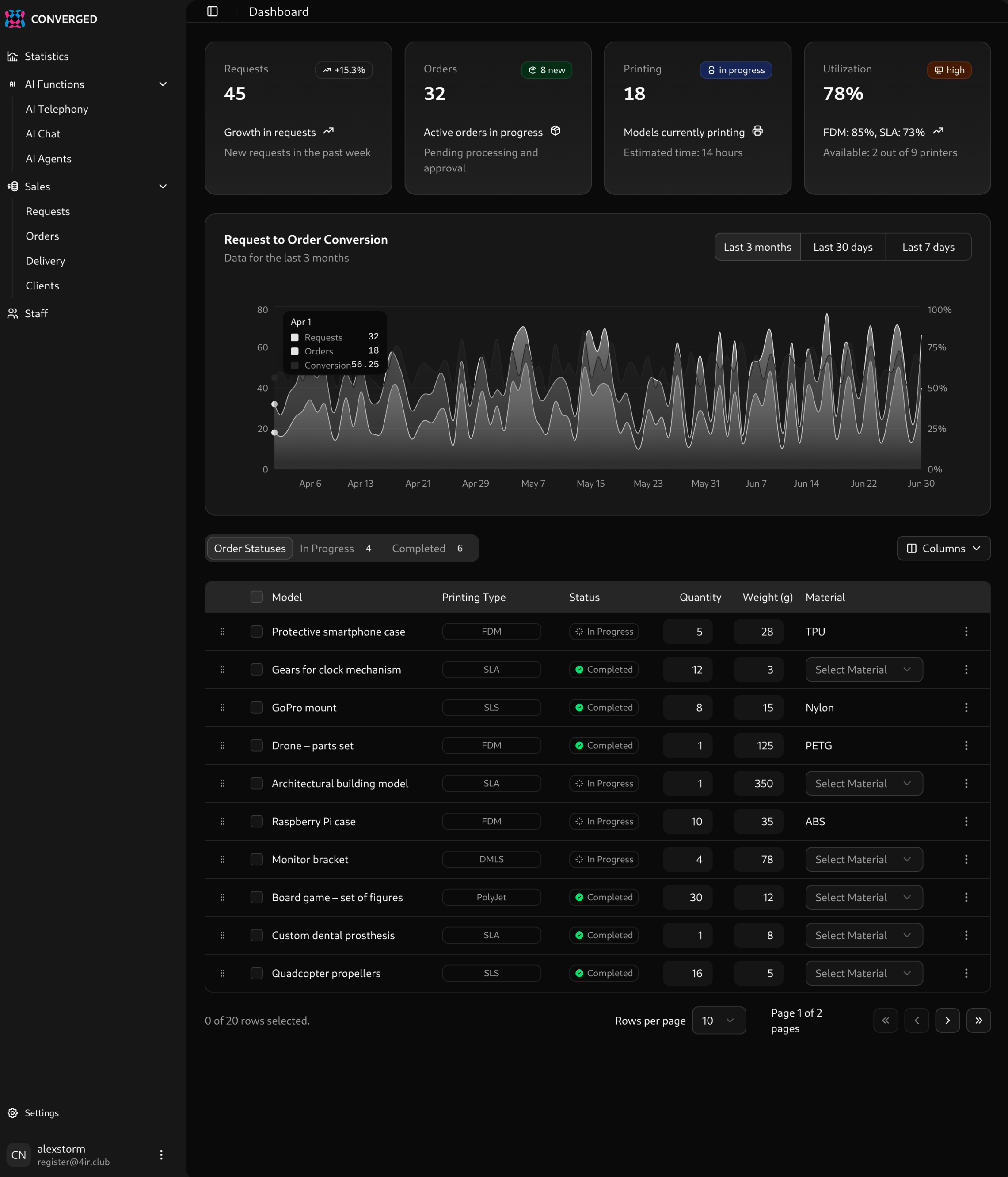

We look at the platform through the customer’s eyes. Businesses do not come for a “files” or “chat” module — they come to solve a concrete task: accept an order, run a model through a slicer, approve pricing, track delivery. That is why the basic unit of value is a Solution, a curated combination of modules that covers the entire scenario.

Today the catalog already includes ready-made bundles for 3D printing service bureaus, contract manufacturers, and R&D teams. Inside you will find file management, customer communication, analytics, and equipment adapters. If the task is unique, you can assemble your own Solution: choose the modules, configure the routes, and save the setup as a team template.

Converged is an open platform, and we rely on contributions from the community. To add a module, build a microservice or micro frontend against the compatible API, publish the source on any Git hosting, and submit it to the catalog. An LLM review automatically scans the repository for binaries, malicious code, or blocking operations for single-threaded Bun. Once verified, the module appears in the catalog and can be plugged into Solutions.
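The review itself is performed by an LLM, but the kinds of issues it looks for can be illustrated with a plain static pre-check. The sketch below is a deliberately simple heuristic and not the actual review pipeline: the extension list, the `preScan` function, and the choice of Node-style synchronous calls (`readFileSync`, `writeFileSync`, `execSync`, `spawnSync`) as examples of blocking operations are all assumptions.

```typescript
interface RepoFile {
  path: string;
  content: string;
}

interface Finding {
  path: string;
  reason: string;
}

// Assumed list of extensions treated as prebuilt binaries.
const BINARY_EXTENSIONS = /\.(exe|dll|so|dylib|bin|node)$/i;

// Synchronous calls that would block a single-threaded runtime.
const BLOCKING_CALLS = ["readFileSync", "writeFileSync", "execSync", "spawnSync"];

function preScan(files: RepoFile[]): Finding[] {
  const findings: Finding[] = [];
  for (const file of files) {
    if (BINARY_EXTENSIONS.test(file.path)) {
      findings.push({ path: file.path, reason: "binary artifact" });
      continue; // no point scanning binary content as text
    }
    for (const call of BLOCKING_CALLS) {
      // Naive substring match; comments and strings are not excluded.
      if (file.content.indexOf(call + "(") !== -1) {
        findings.push({ path: file.path, reason: `blocking call: ${call}` });
      }
    }
  }
  return findings;
}
```

A substring scan like this produces false positives and misses obfuscated code, which is one reason to put a model-based review on top of it.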

The core codebase is available under an open license. Module authors can choose their own distribution terms, but publishing to the catalog requires transparent source code — it keeps users safe and preserves trust across the ecosystem.

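We rejected the usual shared-database approach. The Converged architecture is built on complete isolation: each customer’s data lives in its own workspace, with its own storage and encryption, and no shared multi-tenant database.

This architecture gives you direct control over your data: a workspace can be migrated or taken offline independently of any other customer.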

Converged is released under AGPL-3.0, a strong copyleft license: if you modify the platform and let users interact with it over a network, you must make the source code of your modified version available to them. This protects the community: the code stays open and improvements return to the ecosystem.

Thanks to the open license, the self-hosted version can be deployed for free with almost the full feature set. It is the path for companies that need total control, alignment with corporate policies, or the freedom to experiment on their own hardware. In exchange you take responsibility for installation, backups, and uptime.

The cloud offering is a turnkey service. It spins up almost instantly, scales automatically, and comes with an SLA. We handle backups, monitoring, and updates, so for most customers this option is cheaper and more stable than building the expertise in-house. Pricing is driven not by the cost of code but by implementation speed and predictable operations.

The project is evolving quickly. The latest roadmap, issues, and discussions live in our GitHub repository.

Join the development and follow updates: https://github.com/solenopsys/converged